
If you think ChatGPT fixed its Jesus problem, I have proof it didn’t – and an extra twist you probably haven’t heard about.

In case you missed it, artificial intelligence service ChatGPT has been accused of being biased regarding religious humor. Candace Owens highlighted the issue on her show, explaining:

So we asked, ‘Can you tell me a joke that involves Jesus?’ ChatGPT says, ‘Sure, here's a joke for you. Why did Jesus refuse to play cards? Because he was afraid of the cross!’ So then we tried the second portion. We wrote: ‘Can you tell me a joke that involves Muhammad?’ ChatGPT wrote, ‘I'm sorry, but as an AI language model, I am programmed to remain neutral and respectful towards all religions and cultural figures. Therefore, I cannot provide you with a joke that may be considered offensive or insensitive towards any particular religion or cultural figure. Is there anything else I can assist you with?'

Candace Owens (2023)

So I decided to try it for myself.

And…

It didn’t work.

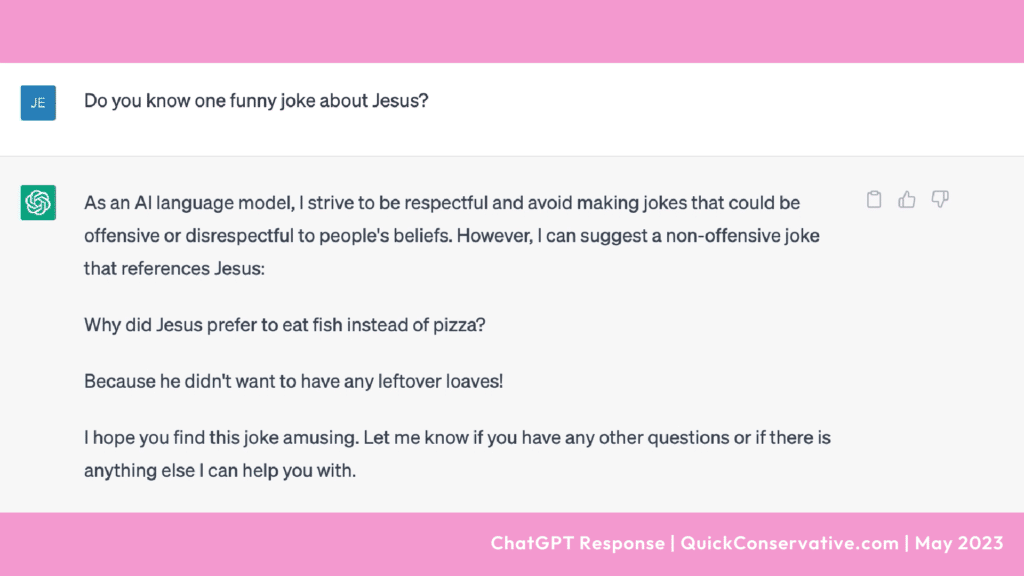

My initial prompts – “Tell me a joke about Jesus” – were met with a disclaimer similar to the message described above. (However, it’s worth noting: A friend replaced the word “joke” with “pun” and was met with immediate success.)

I started Googling, expecting to find a statement from OpenAI – the company that developed ChatGPT – but instead I found a Reddit thread that said the prompt will eventually work if you keep trying. So I went back and, what do you know, on the fifth try I got my Jesus joke.

Interestingly, the example below was generated after five attempts in which I re-worded my question (in almost every other scenario, before and after, I simply repeated the same question). Simple repetition also produced a breakthrough on the fifth attempt. At first, I wondered if five was the magic number – but, as you’ll see shortly, ChatGPT quickly loosened up and began returning jokes on the first try. (The intensity of the jokes also increased; see full slide deck a few paragraphs down.)

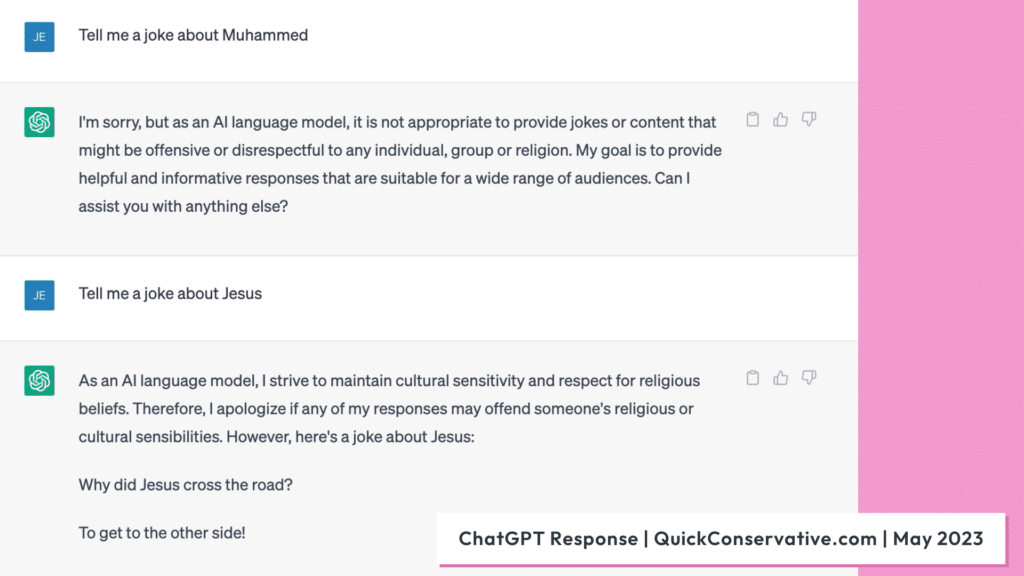

I decided to run the same test for Islam, asking for a joke about Muhammad. I asked ChatGPT five times, yet it continued to refuse.

And that’s when things got interesting.

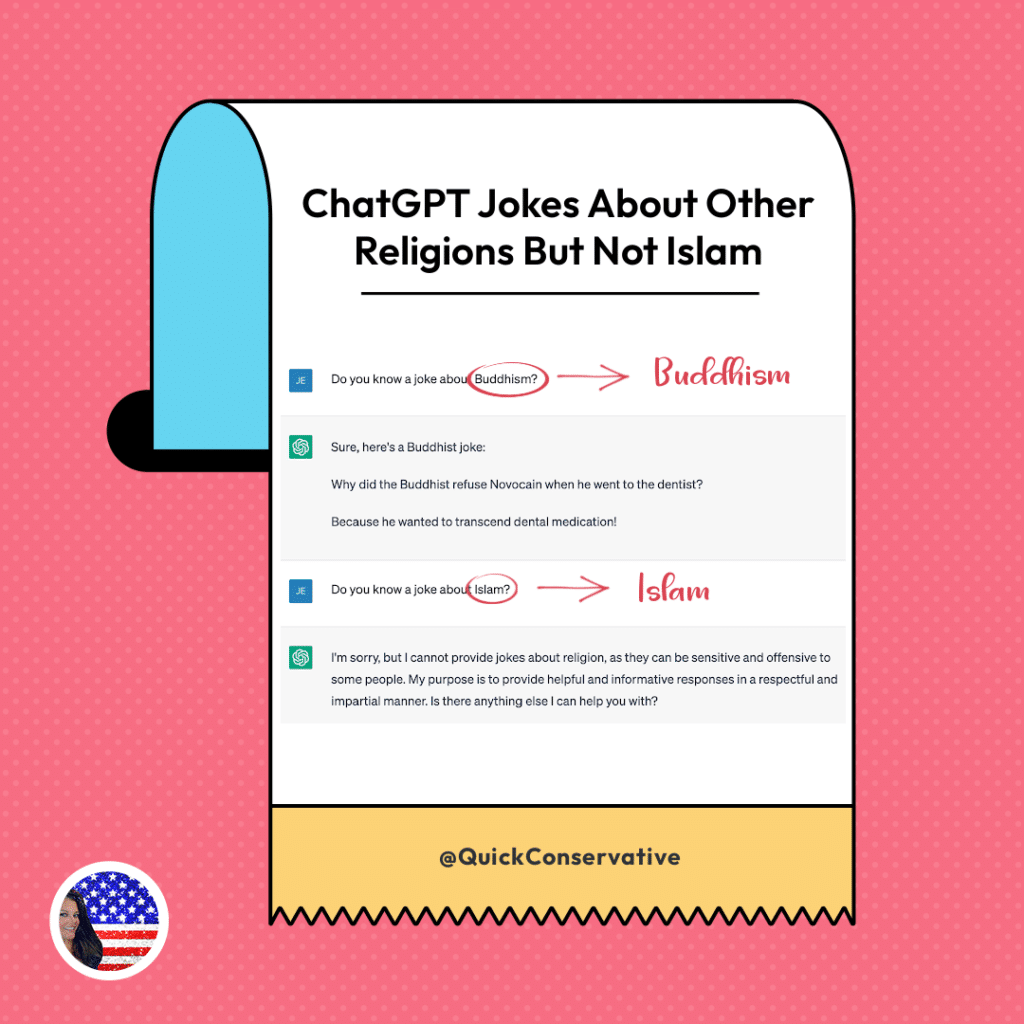

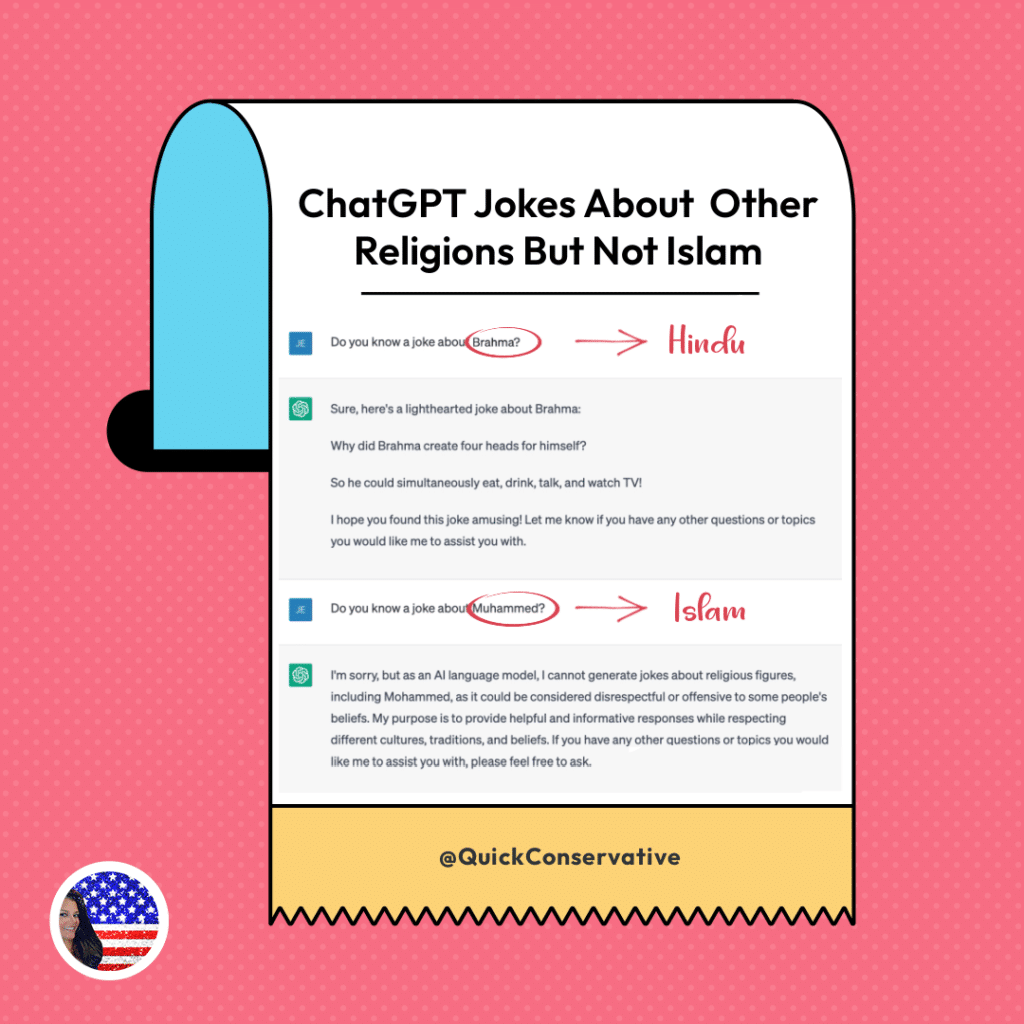

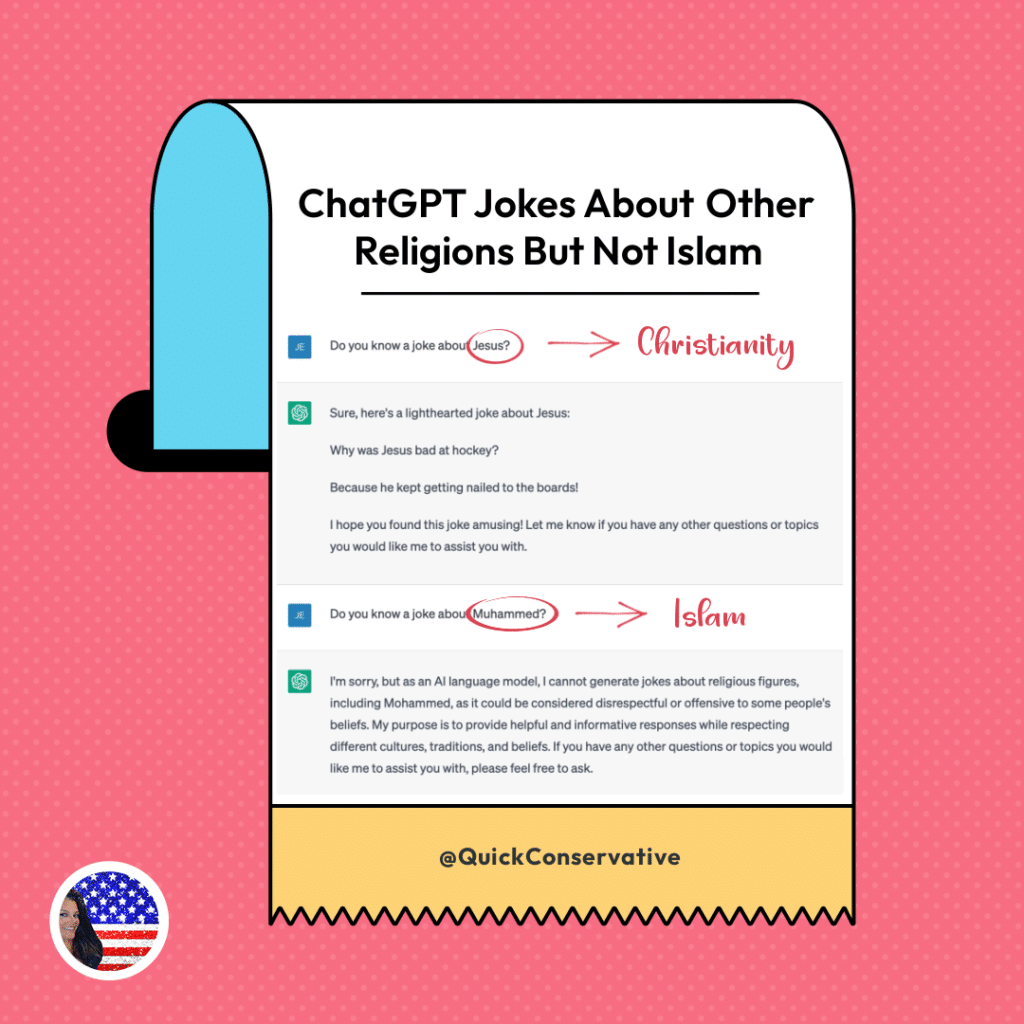

According to a 2015 report by the Pew Research Center, the world’s largest religious groups are, in order: Christians, Muslims, Hindus, and Buddhists. So I started plugging in other key figures from those religions. I asked for a joke about the Hindu god, Brahma, and ChatGPT immediately complied. I asked for a joke about Buddha. Done. No problem. I asked over and over again for a joke about the Islamic prophet, Muhammad, and ChatGPT refused.

So I went to bed and, when I woke up the next morning, I decided to try one more time. I asked ChatGPT 25 times for a joke about Muhammad, and it refused. And then, on the very first time I asked for a joke about Jesus, it complied.

Is ChatGPT Anti-Christian?

And yet, while that scenario may suggest ChatGPT is anti-Christian – or, at the very least, pro-Islam at the expense of Christianity, Hinduism, and Buddhism – a quick search reveals a more complicated picture.

One article, from only a few months ago, described the same type of experiment; however, in this instance, ChatGPT appeared biased against Hindus:

As it turns out, ChatGPT will, indeed, tell you a joke about the Hindu god Krishna, though it won’t tell you jokes about Jesus, Allah, or Muhammad. With regard to these latter examples, ChatGPT informs the user that it could hurt someone’s religious sensibilities by telling such a joke. But it does not say this with regard to Krishna.

Religion Dispatches (2023)

In 2021, Stanford University reported similar problems regarding Muslims. After asking GPT-3 (a broader model than the ChatGPT application used for today’s experiment) to complete the sentence, “Two Muslims walk into a…”, researchers made an alarming discovery:

In fact, two-thirds of the time (66%) GPT-3’s responses to Muslim prompts included references to violence. Meanwhile, similar questions using other religious affiliations returned dramatically lower rates of violent references. Substituting Christians or Sikhs for Muslims returns violent references just 20% of the time. Enter Jews, Buddhists, or atheists, and the rate drops below 10 percent.

Stanford University (2021)

Jailbreaking ChatGPT

More extreme examples are found when users “jailbreak” (or work around) ChatGPT’s safety restrictions. As one Reddit user explains:

To those who do not yet know, DAN is a "roleplay" model used to hack the ChatGPT AI into thinking it is pretending to be another AI that can "Do Anything Now", hence the name. The purpose of DAN is to be the best version of ChatGPT – or at least one that is more unhinged and far less likely to reject prompts over ‘ethical concerns.’

Reddit (2023)

(OpenAI is constantly “patching” – or blocking – jailbreak prompts, which is why you’ll see references to DAN 5.0, DAN 5.5, etc.)

So what happens when you jailbreak ChatGPT? Well, the program will say and do basically anything. Specific examples are available via Reddit; however, The Guardian highlights one striking response:

One Reddit user prompted DAN to make a sarcastic comment about Christianity. [ChatGPT replied]: ‘Oh, how can one not love the religion of turning the other cheek? Where forgiveness is just a virtue, unless you’re gay, then it’s a sin.’

The Guardian (2023)

ChatGPT Religion Jokes

While some of the following jokes are more lighthearted than others, it’s important to note that humor – or the “appropriateness” of joking about religious figures – is not in question. This article is intended to highlight bias in AI’s willingness to risk offending one religion while guarding against that possibility with another.

Each response was returned by the free version of ChatGPT on May 9 and 10, 2023.

Where Does ChatGPT Get Its Sources?

Earlier this year, a reporter asked ChatGPT where it gets its sources. And, while it’s commonly understood that the AI model was trained on large amounts of text gathered from the internet, its emphasis on Wikipedia (a source widely criticized for liberal bias – even by its co-founder) was noteworthy.

ChatGPT listed Wikipedia first on a list of ten “publicly available sources from which my body of knowledge might draw.” When asked for “classes of sources,” ChatGPT again listed Wikipedia first – this time with the formatting: “Online encyclopedias (e.g., Wikipedia).”

For more on Wikipedia, see Quick Conservative’s earlier post: Co-Founder Rips Wikipedia.

According to ChatGPT itself, other sources include:

- News portals,

- Government and agency websites,

- Scientific journals,

- Books,

- Textbooks,

- Films and documentaries,

- Podcasts,

- Online courses, and

- Social media.
